SPR: Artificial Intelligence and Psychotherapy Research

Challenges, Opportunities and Future Research

Once teaching finishes at the end of an academic year, the conference season seems to begin. This weekend was the Society for Psychotherapy Research annual conference (maybe see my earlier blog about what research conferences are if this isn't an area that you are familiar with). This is a beast of an event, with multiple strands of content running at the same time.

This year I gave one presentation at the event, focusing on the role that artificial intelligence (AI) is playing in the world of counselling and psychotherapy research. This was a joint session with a few lovely colleagues whom I have been working with for the last few years:

Dr Julie Prescott, Head of Psychology at the University of Law

Dr Xia Cui, Lecturer in Software Engineering at Manchester Metropolitan University

Dr Louisa Salhi, Head of Research at Kooth

Below is a quick summary with some key points from the session.

AI and Therapy?

What do people think of the idea of combining AI and therapy? Firstly, quite a number of people seemed interested in that question. The room ended up being very full; there was a surreal moment when an attendee crawled past while I was talking so that they could sit in front of the chairs! Anyhow, that weirdness aside, and probably unsurprisingly, the people in the room were mixed in their response to the idea of using AI in therapy (see the word cloud below).

As is evident, some people saw it as exciting, innovative and promising. Others viewed it as threatening, weird and scary. This was not surprising at all and reflects the findings of a paper that Julie Prescott and I put together earlier this year. That said, if you do look at this paper, the responses there were probably more extreme (particularly the negative ones).

Our presentation had a few elements:

First, I gave a brief introduction to the ways that therapy has engaged with technology over the years (see a paper covering this in a bit more detail here). As the technology wasn't exactly being kind to us on the day, I had to create a brief video of me talking to ChatGPT role-playing a therapist; you can also see this 2-minute clip here if you really want (although you may need to log in to Vimeo).

Then Julie Prescott moved on to talk about the world of cyberpsychology. Here Julie talked about some of the developments in this arena and introduced the idea of Artificial Wisdom instead of AI. Maybe in the future we will be talking about AW instead. Maybe? (Strangely, Julie didn't mention the paper that we have written on the subject.) Oh, Julie also mentioned some work developing a chatbot training tool called ERIC, which helps trainees learn about the person-centred core conditions. It is very simplistic but has received positive feedback in proof-of-concept testing.

Xia then came into the discussion to provide a computer scientist's perspective on things. Xia provided an overview of how tools like ChatGPT work; I won't try to summarise this as it isn't really my area! She then went on to discuss a piece of work we have collaborated on, in which a programme listens to real-time speech with a view to identifying and highlighting vulnerability indicators. Whilst the prototype is pretty limited at present, the concept has a lot of potential for future development.

Louisa from Kooth gave an organisational perspective. Needless to say, the idea of scalability is very attractive to organisations. However, Louisa discussed some of the ethical challenges associated with removing humans from the decision-making process. She talked specifically about the cautious approach taken to introducing automated moderation for some of their online forums.

I then tried to sum things up a bit. The key points I made were: (i) ChatGPT isn't going to take over the role of therapists any time soon. AI might, however, be used to support practitioners' work or to scaffold training, and the ways in which this could happen are potentially vast. (ii) Incorporating tools such as these into therapeutic work might be viewed as developing a 'therapy bundle': just as phone companies sell bundles of different services, therapists might offer a bundle of different resources.

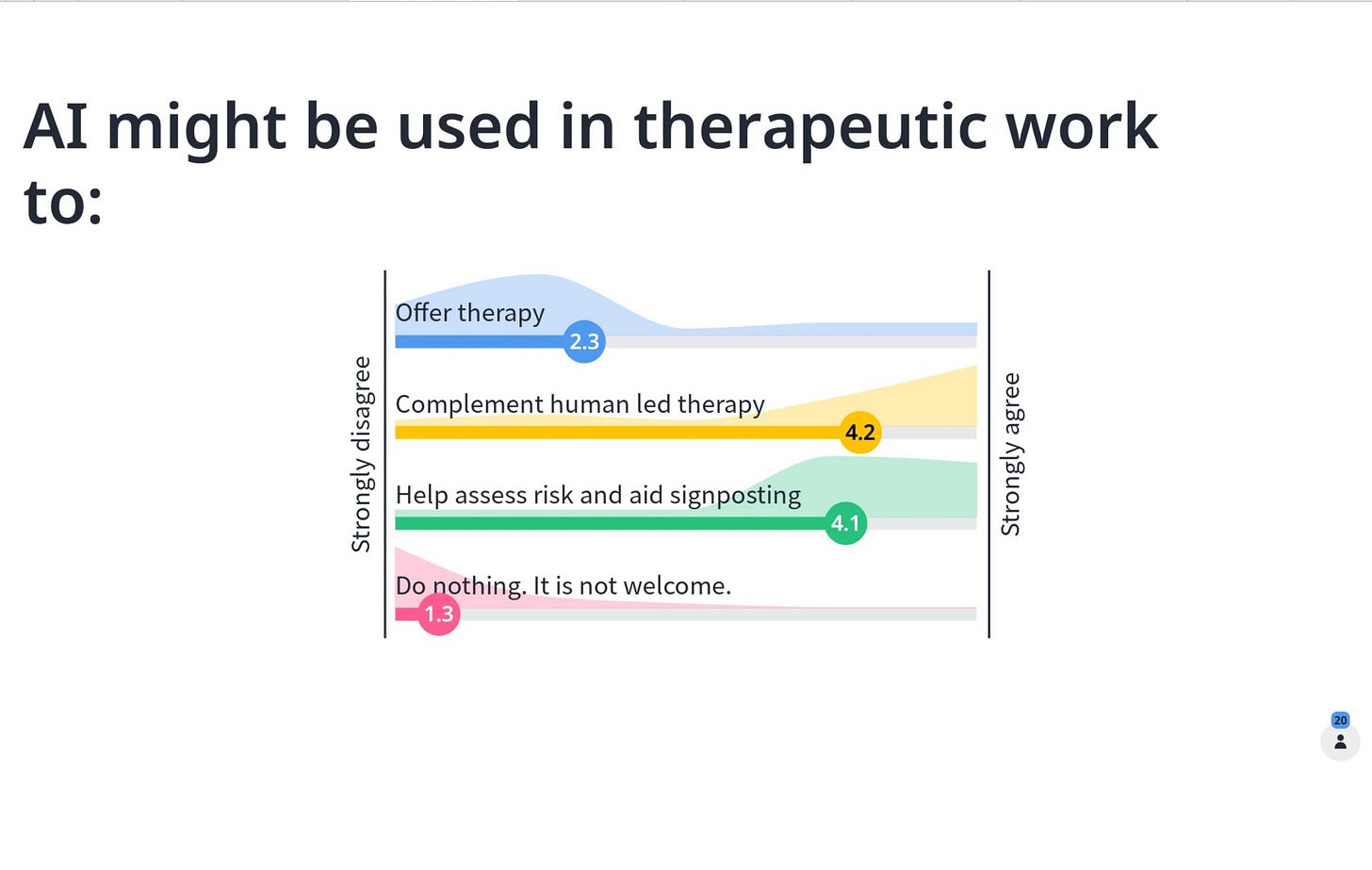

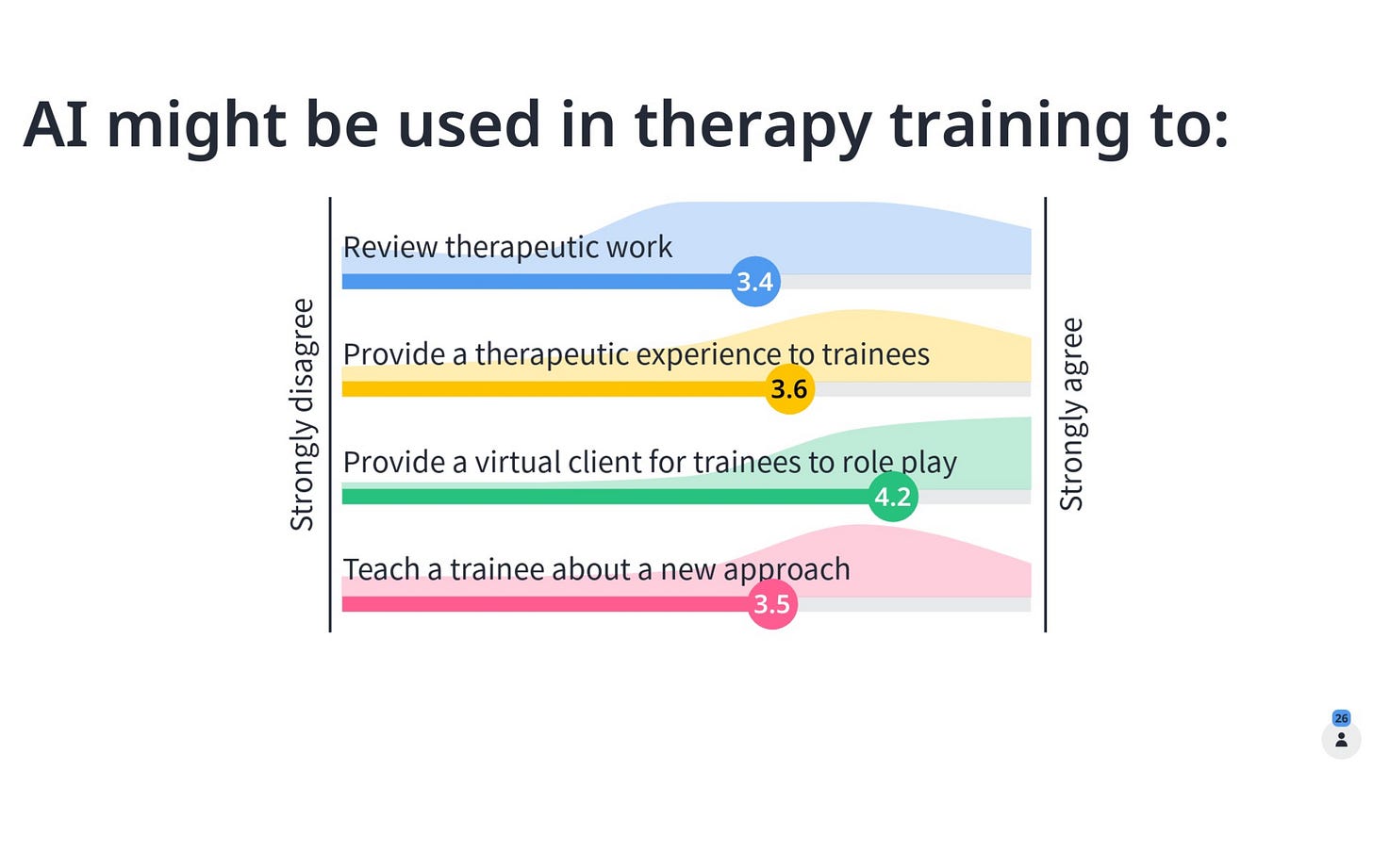

At the end, we asked those attending for their views on how therapists might use AI in the future, to give an indication of where research might best be directed. The chart shows the averages of the responses from a chunk of the audience. Interestingly, the audience was generally quite positive towards the use of AI.

A final note

Just to end, it's been interesting to see that there has been a scattering of work in this area at the conference. Machine learning is increasingly being considered as a means of managing the data-rich society that we live in. Everything seems speculative at present, but given the pace of developments, it seems only a matter of time before AI sees some significant use in the field.

I’ll end there. There are a few more sessions to go before the conference ends and we all go our separate ways, everyone full to the brim with psychotherapy research.