It’s the start of the academic year and I’ve been thinking about whether we can replace human teachers with robots to train the therapist of the future. Obviously that’s not the case, as I’d be out of a job, but I have been considering how different technologies might be used as a form of teaching. Below I reflect briefly on three existing technologies that might be used to support therapists in learning a bit about the therapeutic process.

ELIZA - a simple chatbot

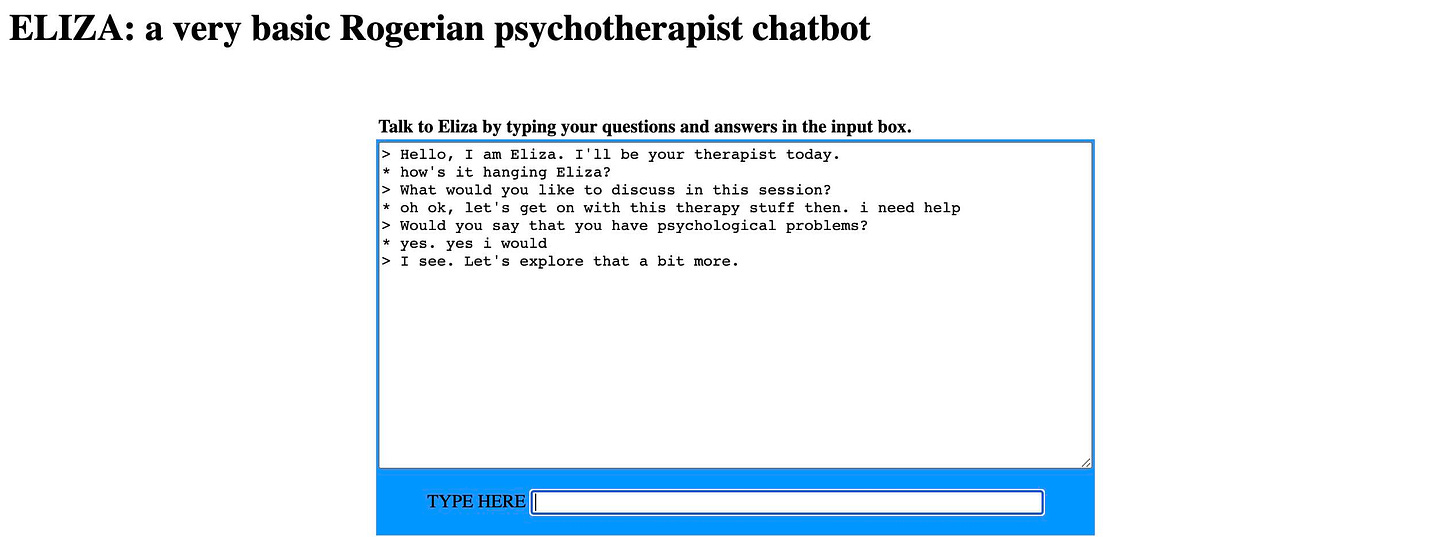

I’ve written about ELIZA before and, in all honesty, it will always have a special place in my therapy training world. It makes me smile and chuckle, and it has made me consider what person-centred therapy might look like without any of the pitfalls of being human (which I say slightly tongue in cheek).

If you’re not familiar with ELIZA, it was one of the first chatbots, created during the 1960s. It was programmed using person-centred language rules and, in many ways, just parrots back to you what you have said. It does, however, provoke interesting thoughts about what being non-directive might mean, and about the way the nuances of being human shape what therapy becomes. With that in mind, it is an interesting provocation for people learning about the role of a therapist and the way non-directive ways of being might manifest in the therapy room (or how they probably shouldn’t). Do have a play - in fact, if you are feeling really adventurous, you might also take things a leap further and create your own therapist bot with MIT’s therapist bot tutorial (nb. I have never tried this).
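For anyone curious about what “parroting back” looks like under the bonnet, here is a minimal sketch in Python. It is not ELIZA’s actual script: the pronoun swaps in `REFLECTIONS`, the patterns in `RULES` and the function names are all made up for illustration, on the assumption that the general idea is to match a phrase and hand it back with the pronouns flipped.

```python
import re

# Hypothetical pronoun swaps so the reply reads from the listener's side.
REFLECTIONS = {
    "i": "you",
    "me": "you",
    "my": "your",
    "am": "are",
    "you": "I",
    "your": "my",
}

# Illustrative (pattern, reply template) rules, not ELIZA's original script.
# Rules are tried in order; the last one is a catch-all for any non-empty text.
RULES = [
    (re.compile(r"i feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"i am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"(.+)", re.IGNORECASE), "You say {0}. Tell me more."),
]


def reflect(fragment: str) -> str:
    """Lower-case the fragment and swap first/second person words."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(statement: str) -> str:
    """Return a reply built from the first rule that matches the statement."""
    cleaned = statement.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.match(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    # Nothing matched (for example, an empty message).
    return "Please go on."


print(respond("I feel anxious about my exams."))
# Why do you feel anxious about your exams?
```

Even a toy like this shows why the replies can feel oddly attentive while understanding nothing at all: the program only rearranges the words it was given.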

ERIC - a pre-programmed chat resource

The next type of resource I want to talk about is a chatbot that I’ve been involved in creating called ERIC - Emotionally Responsive Interactive Client. ERIC is a relatively simple programme that gets trainees to consider a series of prompts that aid contemplation about therapeutic concepts - currently, considering the core conditions articulated by Carl Rogers (that’s empathy, unconditional positive regard and congruence if that’s not your territory). This was created as a bit of a test tool to see how trainees might get on with such a resource.

Now, this is nothing revolutionary at all. It essentially follows a series of pre-programmed orders - “if X then go to Y” type stuff. In a recent project exploring how trainee therapists got on with such a tool, most comments were quite positive. This is the kind of thing trainees reported as positive about the resource:

“the chatbot is an opportunity to practice skills; identifies what skills you are using; also shows inappropriate responses which is a useful learning aid; links to Counselling Tutor embedded in module sessions; links to additional learning material; able to practice when not with peers”

There is certainly room for improvement, but it’s interesting to reflect upon how trainees might use a tool such as this outside of the classroom - it’s certainly more dynamic than some of the book chapters I’ve written. The future could include further developments to teach theory/theoretical concepts or it could get even more sophisticated.
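To give a flavour of the “if X then go to Y” logic mentioned above, here is a rough sketch in Python. It is not how ERIC is actually built: the `SCRIPT` structure, the node names, the client line and the feedback wording are all hypothetical, invented purely to show how a pre-programmed branching conversation might hang together.

```python
# Hypothetical script: every node name, prompt and option below is invented
# for illustration and is not taken from ERIC itself.
SCRIPT = {
    "start": {
        "prompt": "Client: I don't think anyone really listens to me.",
        "options": {
            "a": ("It sounds like you feel unheard.", "empathy"),
            "b": ("You should try talking to more people.", "advice"),
        },
    },
    "empathy": {
        "prompt": "Feedback: that response reflects the client's feeling.",
        "options": {},
    },
    "advice": {
        "prompt": "Feedback: that response gives advice rather than reflecting.",
        "options": {},
    },
}


def next_node(current: str, choice: str) -> str:
    """If the trainee picks X at this node, go to node Y; otherwise stay put."""
    options = SCRIPT[current]["options"]
    if choice in options:
        _reply_text, target = options[choice]
        return target
    return current


def run(choices: list[str], start: str = "start") -> list[str]:
    """Walk the script with a list of choices and collect the prompts shown."""
    node = start
    shown = [SCRIPT[node]["prompt"]]
    for choice in choices:
        following = next_node(node, choice)
        if following != node:
            node = following
            shown.append(SCRIPT[node]["prompt"])
    return shown


for line in run(["b"]):
    print(line)
```

Because everything lives in one dictionary, adding a new prompt or a new branch would mean adding an entry rather than rewriting the logic, which is roughly why tools of this kind are fairly easy to extend.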

ChatGPT - chat based upon large language models

Have you ever just wanted your own personal tutor? Someone who seems to have a bottomless pit of knowledge to share with you, doesn’t judge your silly questions and is available every hour of the day? I think many would say “yes please” to that. Well, ChatGPT can be that resource (sort of).

Over recent months I’ve been playing with ChatGPT and find it to be a novel and engaging tool - also see this blog. For instance, I’ve asked it to tell me about the person-centred therapeutic approach and it talks me through what it knows about this theory quite well. Is it accurate, I hear you ask? Well yes, generally it’s not that bad. Unsurprisingly, it is not always that nuanced, but as its knowledge base extends, I guess this could come. Naomi Klein describes these large language models, which are essentially built using the literatures produced by humans over millennia, as the “largest and most consequential theft in human history”. Gulp! How this will continue, be regulated, or make moral judgements about what information to prioritise is mind-bogglingly complex - although I’m pretty sure that when developers such as Elon Musk say it needs to be regulated, we also need to ensure that it is not the same people doing the regulating. Also, I’m not touching here upon the huge energy consumption, and thus energy cost, associated with using AI. For now, whilst I acknowledge that I am exploring this territory with some blinkers on, I think it is important that those interested in therapy keep abreast of some of these developments. With that in mind, do have a play and see what you think.

To end…

That’s it for now. I’m sure there are other things that could be added to the list (if you know of them, do feel free to add them in the comments section below) but I hope that gives you something to think about. The pedagogy of teaching anyone, not just therapists, continues to evolve and we’re just starting to see some of these tools become commonplace due to the way that these technologies are becoming easier to access and use. No doubt this will continue to evolve and other tools will become available - for instance, tools could be developed to listen to therapy conversations and highlight risk indicators arising in sessions that might otherwise be missed, or to provide automated feedback based upon the session (e.g. how non-directive were you in your language etc.). Now there are ethics galore to unpack about such tools, but if they are safe and help individuals develop their therapeutic skills, then I’m in. What tools would you have developed if you were in charge and had the resources?

That's very person-centred of you - to be open to new experiences knowing that the facts are friendly! Thank you for discouraging my inner luddite